Overview

AI has left the lab. It is reshaping how we live and work. To fully exploit its benefits, we must address critical gaps in trustworthiness. We work on Deploying General AI in the Private World.

AI’s transformative era. AI has the potential to address global challenges. It can increase productivity, support ageing populations, and help tackle urgent issues like climate change. Since the introduction of ChatGPT, AI has moved well beyond research settings. It is now used by billions worldwide and is shaping the economy and society. AI is no longer experimental; it is a real force driving change.

The rise of general-purpose AI. Recent developments have focused on general-purpose AI, often called foundation models or frontier models. These are large models trained on vast amounts of data to perform a wide range of tasks without task-specific training. They have made impressive progress in language, vision, and multimodal reasoning. Yet they remain limited in reliably solving specific, real-world problems without substantial adaptation.

The adaptation gap. The key challenge we see today is the adaptation of general AI to private, real-world settings. General-purpose capabilities alone are insufficient. To close this gap, we identify three concrete challenges:

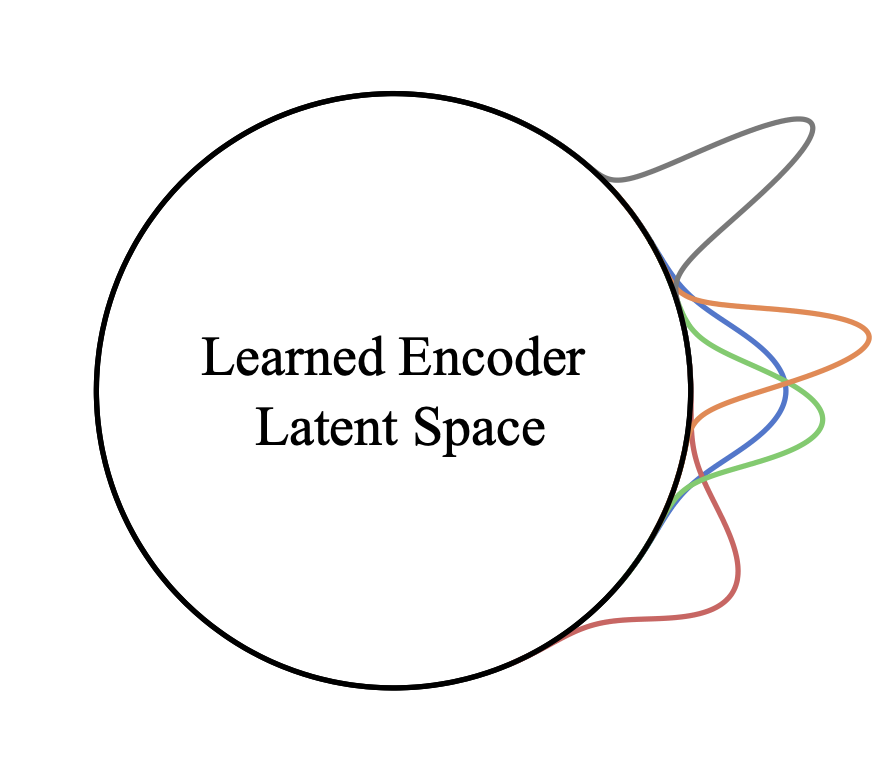

1. Effective communication of human intent. Even the smartest general AI does not perform well without appropriate context. We study novel interfaces that let users communicate their goals and implicit preferences more accurately and efficiently. We also explore modular architectures that let models be adapted more readily to diverse user needs.

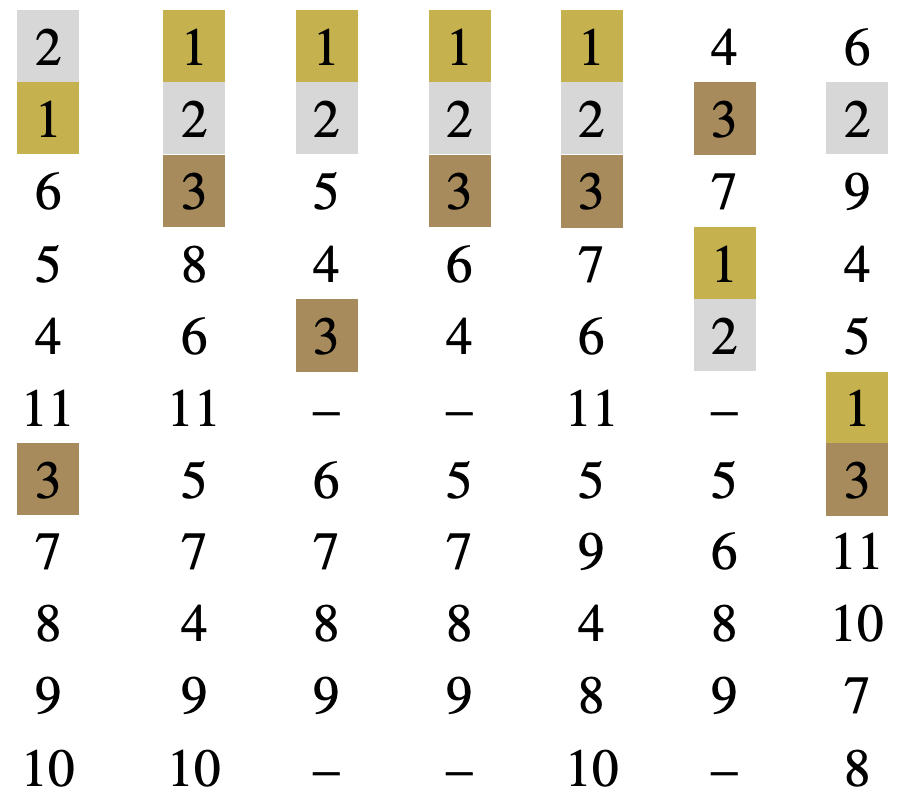

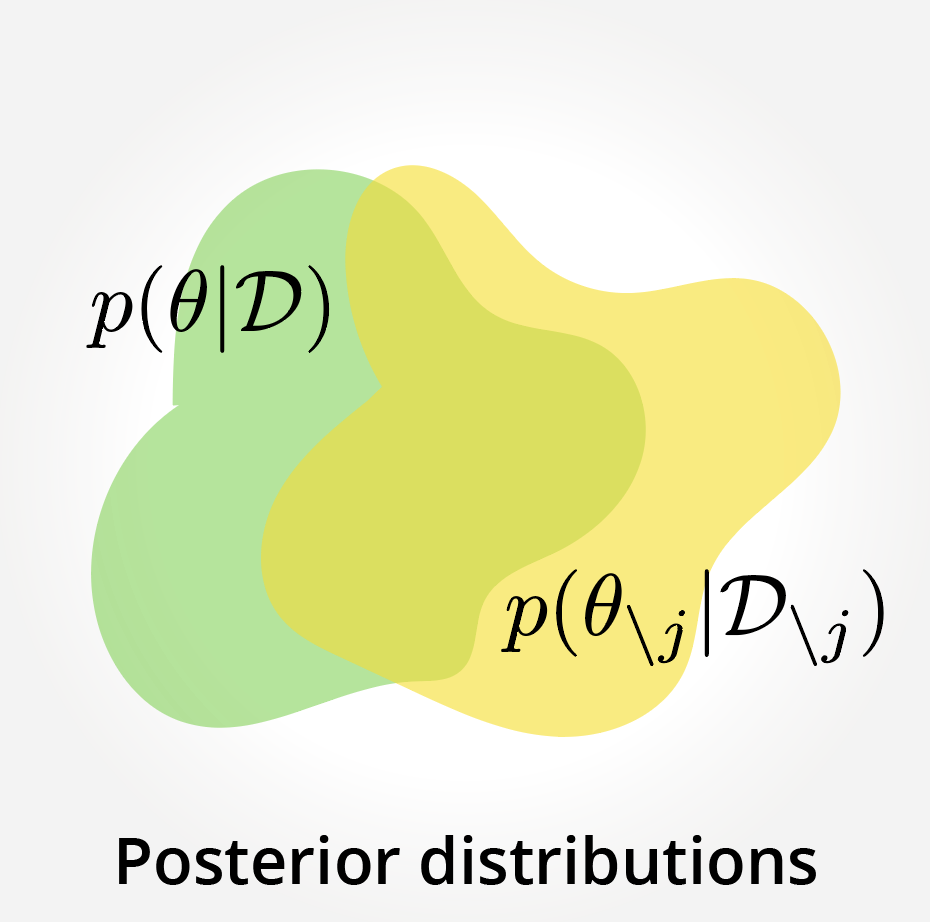

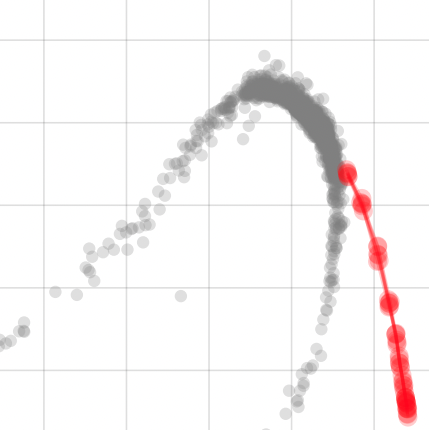

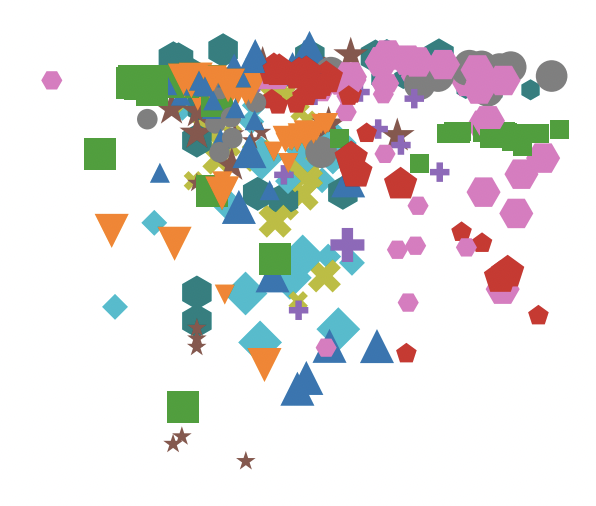

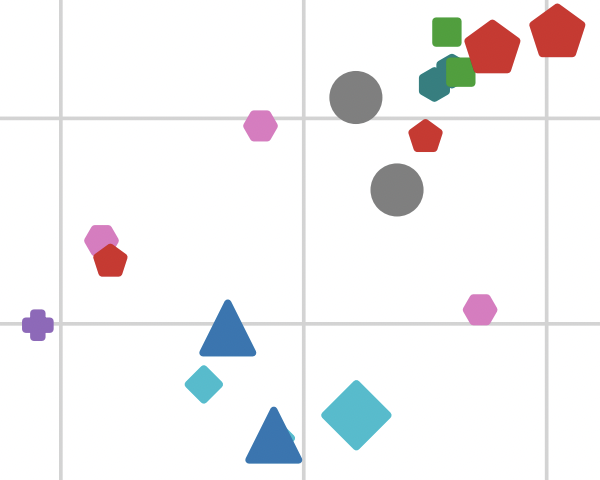

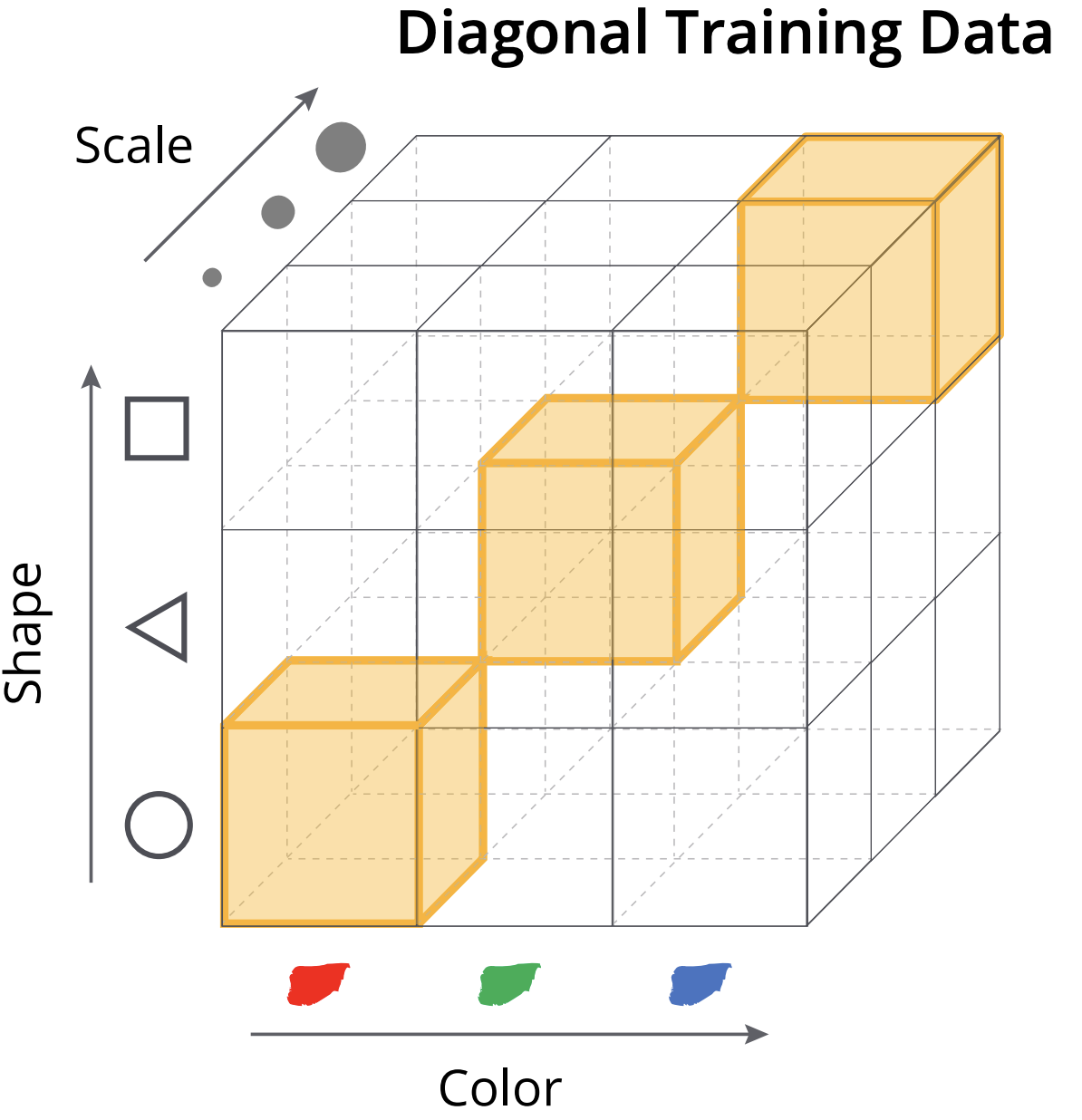

2. Human-AI feedback loops. For wider use, an AI system must be more debuggable. We believe the current focus of explainable AI (XAI) is skewed and impractical for inexperienced users. We identify data as a critical bridge in the human-AI feedback loop. We focus on training data attribution (TDA) methods to build transparent, actionable feedback loops for more intuitive model adaptation.

3. Safe and compliant deployment. AI increasingly manages sensitive data and critical decisions. Privacy, security, and regulatory compliance are no longer optional; they are essential for real-world deployment. We develop techniques to ensure AI systems remain secure and trustworthy.

By addressing these areas, we aim to reduce AI-related risks and maximise its societal benefits. Effective adaptation of general AI to private contexts will signal the true beginning of an AI-led industrial revolution.

Fortunately, we are not alone in this effort. Many other research labs around the world make important contributions to Trustworthy AI. Our group stands out by striving for working solutions that are widely applicable and can be deployed at large scale. We thus name our group Scalable Trustworthy AI (STAI). For impact at scale, we commit ourselves to the following principles:

- Simple is better than complex. Scalability and applicability are inversely correlated with complexity.

- Understand and then solve. You can only solve a problem when you understand it.

- Do not follow a dead end. When an approach is fundamentally limited in the long run, don’t take it.

For prospective students: You might be interested in our internal curriculum and guidelines for a PhD program: Principles for a PhD Program.

The STAI group is part of the Tübingen AI Center and the University of Tübingen. STAI is also within the ecosystem of the International Max Planck Research School for Intelligent Systems (IMPRS-IS) and the ELLIS Society.