Trustworthy Machine Learning

Winter Semester 2022-2023, University of Tübingen

Overview

Goal

- Students will be able to critically read, assess, and discuss research work in Trustworthy Machine Learning (TML).

- Students will gain the technical background to implement basic TML techniques in a deep learning framework.

- Students will be ready to conduct their own research in TML and make contributions to the research community.

Prerequisites

- Familiarity with Python and PyTorch coding.

- A pass grade from the Deep Learning Course (or equivalent).

- Basic knowledge of machine learning concepts.

- Basic maths: multivariate calculus, linear algebra, probability, statistics, and optimisation.

Reading list

Recommended papers for each topic.

OOD Generalisation

- Generalizing from a Few Examples: A Survey on Few-Shot Learning

- Generalizing to Unseen Domains: A Survey on Domain Generalization

- In Search of Lost Domain Generalization

- Underspecification Presents Challenges for Credibility in Modern Machine Learning

- Shortcut Learning in Deep Neural Networks

- Which Shortcut Cues Will DNNs Choose? A Study from the Parameter-Space Perspective

- Environment Inference for Invariant Learning

- ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness

Explainability

- Explanation in artificial intelligence: Insights from the social sciences

- Sanity Checks for Saliency Maps

- A benchmark for interpretability methods in deep neural networks

- Expanding Explainability: Towards Social Transparency in AI systems

- Human-Centered Explainable AI: Towards a Reflective Sociotechnical Approach

- Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps

- Axiomatic attribution for deep networks

- SmoothGrad: removing noise by adding noise

- A Unified Approach to Interpreting Model Predictions

- Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV)

- Visualizing Deep Neural Network Decisions: Prediction Difference Analysis

- Learning Deep Features for Discriminative Localization

- Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization

- “Why Should I Trust You?”: Explaining the Predictions of Any Classifier

- Feature Visualization

- Understanding Black-box Predictions via Influence Functions

- Estimating Training Data Influence by Tracing Gradient Descent

Uncertainty

- A Baseline for Detecting Misclassified and Out-of-Distribution Examples in Neural Networks

- On calibration of modern neural networks

- Deep Anomaly Detection with Outlier Exposure

- A Simple Unified Framework for Detecting Out-of-Distribution Samples and Adversarial Attacks

- Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning

- Simple and Scalable Predictive Uncertainty Estimation using Deep Ensembles

- Loss Surfaces, Mode Connectivity, and Fast Ensembling of DNNs

- Averaging weights leads to wider optima and better generalization

- Efficient and scalable Bayesian neural nets with rank-1 factors

- What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?

- Addressing Failure Prediction by Learning Model Confidence

- Why M Heads are Better than One: Training a Diverse Ensemble of Deep Networks

- DiverseNet: When One Right Answer is not Enough

Evaluation

- Question and Answer Test-Train Overlap in Open-Domain Question Answering Datasets

- A Metric Learning Reality Check

- Evaluating Weakly-Supervised Object Localization Methods Right

- Do ImageNet Classifiers Generalize to ImageNet?

- Challenging Common Assumptions in the Unsupervised Learning of Disentangled Representations

- Realistic Evaluation of Deep Semi-Supervised Learning Algorithms

- Zero-Shot Learning - The Good, the Bad and the Ugly

- Sanity Checks for Saliency Maps

- In Search of Lost Domain Generalization

- Are We Learning Yet? A Meta-Review of Evaluation Failures Across Machine Learning

- How to avoid machine learning pitfalls: a guide for academic researchers

- Show Your Work: Improved Reporting of Experimental Results

Policies

Registration & Exercise 0

The registration period ends on 27 October 2022. During this period, you are required to work on Exercise 0 and submit it.

Exercise 0 is an individual exercise based on the prerequisite material for the course.

It will be published on this web page right after the first lecture on 21 October 2022 (see the Schedule & exercises section below).

Students who wish to enrol must submit their work on Exercise 0.

To discourage empty submissions, Exercise 0 has a minimum required score of 30%.

The necessary prerequisite material will be covered in the first lecture.

Exercise 0 serves two purposes:

- For us to admit students who are sufficiently motivated for the course.

- For you to gauge your own readiness for the course.

The timeline for registration is as follows:

- Now to 20 October 2022: Check the time and location of the course.

- 21 October 2022: Attend the first lecture.

- 21-27 October 2022: Work on Exercise 0 & submit.

- 28 October 2022: Register students who have submitted Exercise 0.

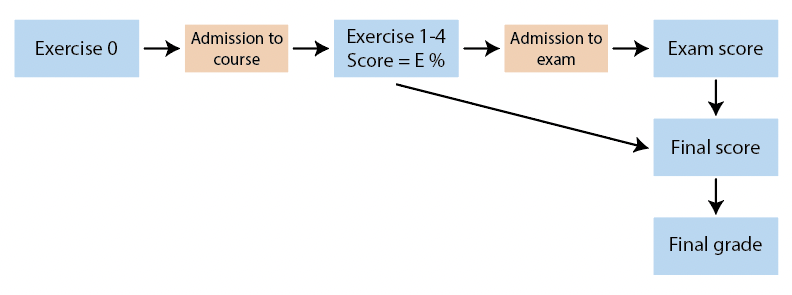

Grading policy

The final grade is based primarily on your exam performance. However, to encourage participation in the exercises, we award a bonus on top of the exam score based on your exercise performance.

Exercise 0 and registration for the course

- Only students who submit Exercise 0 are admitted to the course.

- Exercise 0 is an individual exercise.

Exercises 1-3 score $E$% (0-100%)

- Average score from Exercises 1-3.

- Exercises 1-3 are group exercises.

Admission to exam

- If your exercise score $E$ is $\geq 60$%, you are admitted to the exam.

- Exceptional cases can be handled on an individual basis.

Exam score (0 - 100%)

- Based on your exam performance.

- The pass threshold for the course will be $\geq 50$% on the exam.

- There will be two exams: Exam 1 and Exam 2.

- Case A: Sit Exam 1 and pass → Exam 1 score will be final (cannot sit Exam 2).

- Case B: Sit Exam 1 and fail → You may sit Exam 2; Exam 2 score will be final.

- Case C: Choose to skip Exam 1 → Exam 2 score will be final.

Final score (0 - 100%)

- The final score is your exam score with possible increments from your exercise performance.

- Increment from exercise = $(E-60)/40 \times 20$%, where $E$% is your exercise score.

- If $E=100$, you get 20 additional percentage points on top of your exam score.

- If $E=60$, no additional points will be awarded.

- If $E< 60$, you will not be admitted to the exam.

- The exercise increment cannot make you pass the course if your exam score is below $50$%.
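The increment rule above can be sketched in Python as a quick self-check. This is a minimal illustration, not an official grade calculator: the function names `exercise_increment` and `final_score` are hypothetical, and clamping the final score at 100% is an assumption, since the page states the range as 0-100% but does not say how a score above 100% is handled.

```python
from typing import Tuple


def exercise_increment(e: float) -> float:
    """Bonus percentage points for an exercise score of e% (hypothetical helper)."""
    if e < 60:
        # Below 60% you are not admitted to the exam, so no final score exists.
        raise ValueError("exercise score below 60%: not admitted to the exam")
    return (e - 60) / 40 * 20


def final_score(exam: float, e: float) -> Tuple[float, bool]:
    """Return (final score in %, passed) for an exam and exercise score (hypothetical helper)."""
    passed = exam >= 50  # the pass decision uses the exam score alone
    # ASSUMPTION: the final score is clamped to the stated 0-100% range.
    score = min(exam + exercise_increment(e), 100.0)
    return score, passed


print(exercise_increment(100))  # 20.0
print(exercise_increment(80))   # 10.0
print(final_score(45, 100))     # (65.0, False)
print(final_score(70, 80))      # (80.0, True)
```

The third call shows the last rule in action: the bonus raises the score to 65%, but the course is still failed because the exam score is below 50%.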

Final grade (1,0 - 5,0)

- The final grade of the course will be based on the final score.

Communication

Lecturer

Tutors

Central email

Please use the STAI group email stai.there@gmail.com to

- Submit your Colab exercises;

- Ask questions; and

- Send us feedback.

Discord forum

If you are registered for the course, ask the lecturer or tutors to add you to the Discord channel; we need your name and email address. Use the channel for

- Asking questions;

- Sending us feedback;

- Receiving official announcements; and

- Communicating with others (e.g. finding partners).

When & where

Lecture & tutorial location

Seminarraum C118a (Informatik Sand 14) https://goo.gl/maps/mP6Q92s6QcLoHK5v7

Lecture times

- Fridays

- 1st session: 14:15-15:00

- 5-min break: 15:00-15:05

- 2nd session: 15:05-15:50

Tutorial times

- Fridays 16:00-18:00

- To be discussed

Exam dates and locations

- Exam 1: 10:00 - 11:30, Tuesday 21.02.2023, Hörsaal N04 (Hörsaalzentrum Morgenstelle)

- Exam 2: 13:00 - 14:30, Friday 14.04.2023, Hörsaal N16 (Hörsaalzentrum Morgenstelle)

Schedule & exercises

| # | Date | Content | Exercises | Lead tutor |
|---|---|---|---|---|
| L1 | 21/10/2022 | Introduction | Exercise 0 (Due: 27/10/2022) | Elif |
| L2 | 28/10/2022 | OOD Generalisation - Definition and limitations (Video) | Exercise 1 (Due: 21/11/2022) | Alex |
| L3 | 04/11/2022 | OOD Generalisation - Cue selection problem (Video) | | Alex |
| L4 | 11/11/2022 | OOD Generalisation - Cue selection and adversarial generalisation (Video) | | Alex |
| L5 | 18/11/2022 | More OOD and Explainability - Definition and limitations (Video) | | Alex / Elisa |
| L6 | 25/11/2022 | Explainability - Attribution (Video) | Exercise 2 (Due: 16/12/2022) | Elisa |
| L7 | 02/12/2022 | Explainability - Attribution & evaluation (Video) | | Elisa |
| L8 | 09/12/2022 | Explainability - Model explanation (Video) | | Elisa |
| Christmas Break | | | | |
| L9 | 13/01/2023 | Uncertainty - Definition and evaluation (Video) | Exercise 3 (Due: 03/02/2023) | Michael |
| L10 | 20/01/2023 | Uncertainty - Epistemic uncertainty (Video) | | Michael |
| L11 | 27/01/2023 | Uncertainty - Aleatoric uncertainty (Video) | | Michael |
| L12 | 03/02/2023 | Exam information, Final topics, Research @ STAI (Video) | | Elif |